Revolutionizing Great Barrier Reef Simulations with GPU Computing on LUMI

Ocean Models to Protect the Great Barrier Reef

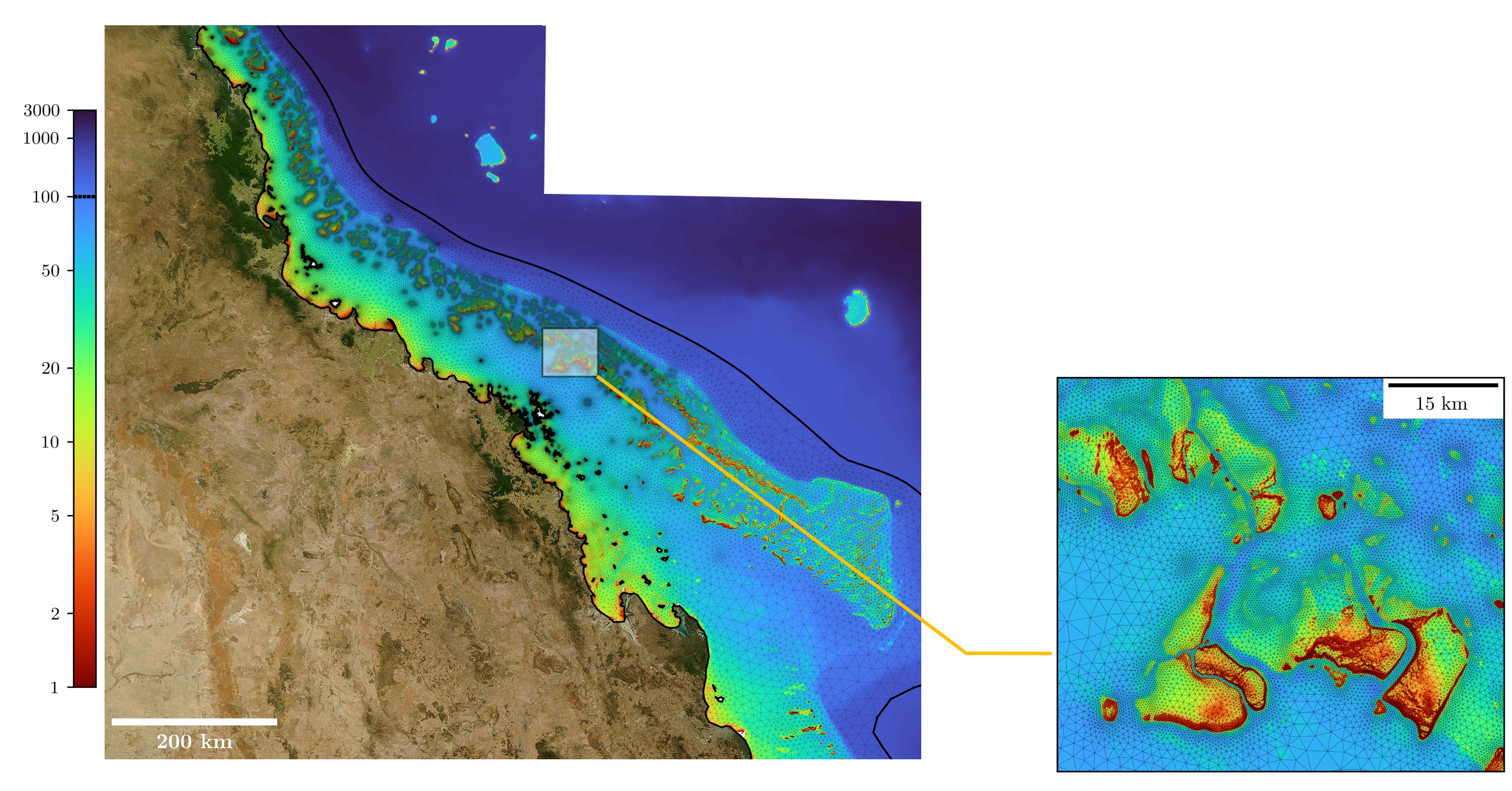

When you think of Australia, you think of the Great Barrier Reef, a complex network of reef structures along the northeast coast. The Great Barrier Reef (GBR) stretches over 2,000 km and is home to over a thousand species of fish and hundreds of species of coral, offering breathtaking marine scenery and unique biodiversity. As the GBR is the world's most extensive coral reef ecosystem, it is crucial to understand and protect it. But how do you decide where to focus conservation efforts? Ocean modelling can be an excellent tool for gaining the insights needed to protect this ecosystem.

Prof. Emmanuel Hanert (UCLouvain) and his team (including Miguel De Le Court and Jonathan Lambrechts) developed the multiscale ocean model SLIM, which has been applied worldwide, from the Bahamas to the Persian Gulf. Depending on the complexity of the flow to be simulated, SLIM offers a range of modules for describing currents, from simple 1D models to complex 3D ones. SLIM can also model the transport of larvae and debris, as well as the dynamics of pollutants and sediments in ocean waters.
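Larval transport is described here only at a high level. A common approach for this kind of problem is Lagrangian particle tracking: each virtual larva is moved by the simulated current, plus a random-walk term standing in for unresolved turbulent diffusion. The C++ sketch below assumes a 2D velocity field supplied as a function, forward Euler time stepping and a constant horizontal diffusivity `K`; the names `Particle`, `Velocity` and `step_particles` are hypothetical, and SLIM's own scheme (integration order, 3D transport, larval behaviour, mortality) may well differ.

```cpp
#include <cmath>
#include <random>
#include <vector>

// Hypothetical types: a larva's horizontal position (m) and a current (m/s).
struct Particle { double x, y; };
struct Velocity { double u, v; };

// Advance all particles by one time step dt (s).
// Advection: forward Euler with the velocity sampled at the particle position.
// Diffusion: random walk with constant diffusivity K (m^2/s), whose step has
// standard deviation sqrt(2 * K * dt) along each axis.
template <typename VelocityField>
void step_particles(std::vector<Particle>& particles, VelocityField velocity,
                    double dt, double K, std::mt19937& rng) {
  std::normal_distribution<double> noise(0.0, 1.0);
  const double sigma = std::sqrt(2.0 * K * dt);
  for (Particle& p : particles) {
    const Velocity uv = velocity(p.x, p.y);
    p.x += uv.u * dt + sigma * noise(rng);
    p.y += uv.v * dt + sigma * noise(rng);
  }
}
```

Counting where each particle ends up after the larval period is what turns such trajectories into the reef-to-reef connections discussed next.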

Prof. Emmanuel Hanert: “For the Great Barrier Reef, we use SLIM not only to simulate ocean currents but also to study reef connectivity: the exchange of living material between reefs. Corals, which form reefs, are fixed in place, but when they reproduce, they release larvae that drift with the currents. Since we cannot directly track these larvae, the only way to understand their dispersal is through simulations. By modelling larval transport, we can determine how reefs are interconnected, identifying which reefs supply larvae to others. This forms a network, or connectivity graph, mapping these relationships. Analysing this graph helps us pinpoint key reefs that are crucial for sustaining others by supplying large numbers of larvae. This insight is vital for conservation efforts, allowing us to prioritise protecting the reefs that are essential for the resilience and regeneration of the entire ecosystem.”
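Once each virtual larva's release reef and settlement reef are known, a connectivity graph like the one described in the quote can be stored as a matrix whose entry (i, j) counts larvae released on reef i that settle on reef j. The hypothetical sketch below ranks reefs by their out-strength, the total number of larvae they supply to other reefs. This is only one simple metric; the team's analyses may rely on others, such as network centrality measures.

```cpp
#include <algorithm>
#include <cstddef>
#include <numeric>
#include <vector>

// conn[i][j]: larvae released on reef i that settle on reef j
// (hypothetical input, e.g. counted from particle-tracking end points).
using Matrix = std::vector<std::vector<double>>;

// Out-strength of each reef: larvae it supplies to *other* reefs.
// Self-recruitment (the diagonal conn[i][i]) is excluded.
std::vector<double> export_strength(const Matrix& conn) {
  std::vector<double> out(conn.size(), 0.0);
  for (std::size_t i = 0; i < conn.size(); ++i)
    for (std::size_t j = 0; j < conn[i].size(); ++j)
      if (i != j) out[i] += conn[i][j];
  return out;
}

// Reef indices ordered from strongest to weakest larval source.
std::vector<std::size_t> rank_sources(const Matrix& conn) {
  const std::vector<double> out = export_strength(conn);
  std::vector<std::size_t> order(out.size());
  std::iota(order.begin(), order.end(), std::size_t{0});
  std::stable_sort(order.begin(), order.end(),
                   [&](std::size_t a, std::size_t b) { return out[a] > out[b]; });
  return order;
}
```

Excluding the diagonal keeps the ranking focused on how much a reef helps *other* reefs, which matches the conservation question of which reefs sustain the rest.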

Bridging the Gap: Multiscale Modelling for a Complex Reef System

SLIM is also a multiscale model, which is essential for simulating the hydrodynamics of the Great Barrier Reef, a vast and complex system. Understanding both small- and large-scale processes requires an approach that can capture interactions across different spatial scales. To protect and restore the reef, it is crucial to analyse the system at multiple levels: from the broad scale (~1000 km), encompassing clusters of reefs (10-100 km), down to individual reefs (0.1-1 km) and even finer scales where different coral species interact. While detailed knowledge exists at these fine scales, effective conservation measures and regulations must be implemented at larger scales. A multiscale modelling approach bridges this gap, enabling the simulation of ocean currents and reef dynamics from the entire system down to the behaviour of individual reefs, ensuring a comprehensive understanding of reef processes.
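Multiscale models such as SLIM typically rely on unstructured meshes whose element size varies in space, so that a single simulation can span the whole system while zooming in on individual reefs. The sketch below shows one simple way to express such a target element size, assuming linear grading with distance to the nearest reef between a fine size `h_min` and a coarse size `h_max`. The function name and the grading rule are hypothetical illustrations, not SLIM's actual mesh generator.

```cpp
#include <algorithm>

// Target element size (m) at distance d (m) from the nearest reef:
// h_min on the reef, growing linearly over grading_length (m),
// and capped at h_max further away.
double target_element_size(double d, double h_min, double h_max,
                           double grading_length) {
  const double t = std::min(std::max(d / grading_length, 0.0), 1.0);
  return h_min + t * (h_max - h_min);
}
```

In practice, mesh generators often combine several such criteria (coastline, bathymetry, reef proximity) and limit how quickly element size may change between neighbouring elements.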

Existing three-dimensional models, limited by computational constraints, could only achieve a coarse resolution of about 4 kilometres, missing details that are critical for understanding processes such as coral larvae dispersal and cold-water upwelling.

Leveraging GPUs for Unprecedented Resolution

Prof. Emmanuel Hanert: “To obtain a higher resolution, we completely redesigned our multiscale ocean model to run on GPUs. Discussions with the CECI teams highlighted the potential of GPUs and reinforced the decision to adapt SLIM to fully leverage the capabilities of modern HPC clusters like Lucia and LUMI.”

The redesign involved a two-year overhaul of the code, transitioning from a CPU-based to a GPU-based architecture and resulting in a more than 100-fold increase in computational speed. Prof. Emmanuel Hanert: “With this redesign, SLIM scales exceptionally well on hundreds of GPUs, allowing us to conduct full 3D simulations of the GBR at an unprecedented horizontal resolution of ~100 metres. This advancement provides a detailed and accurate representation of fine-scale dynamics across the entire reef system.”
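Statements such as a "100-fold increase in computational speed" and "scales exceptionally well" are usually backed by two numbers from a scaling study: the speedup S = T_ref / T_new, and the strong-scaling efficiency E(p) = (T(p_ref) · p_ref) / (T(p) · p) for a fixed problem run on p instead of p_ref devices. The helpers below are a generic sketch of these standard definitions; the function names are hypothetical and any timings plugged into them here are made up, not SLIM measurements.

```cpp
// Speedup of a new code version over a reference run: S = T_ref / T_new.
double speedup(double t_ref, double t_new) { return t_ref / t_new; }

// Strong-scaling efficiency when the same problem moves from p_ref to p
// devices: E = (T(p_ref) * p_ref) / (T(p) * p). E = 1 means ideal scaling,
// i.e. the run time drops in exact proportion to the added devices.
double strong_scaling_efficiency(double t_ref, int p_ref, double t_p, int p) {
  return (t_ref * p_ref) / (t_p * p);
}
```

A CPU-to-GPU speedup depends on what is compared (for example, one CPU node against one GPU), so scaling reports normally state the reference configuration explicitly.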

A powerful example of how 3D modelling enhances our understanding of physical processes is the study of upwelling—the vertical movement of water that brings cooler, nutrient-rich water from deeper layers to the surface. Recent observations in the GBR revealed that some reefs appear more resistant to bleaching, but the reason remained unclear. It was later discovered that these reefs are positioned in upwelling zones, where vertical currents provide a cooling effect, mitigating heat stress. High-resolution 3D models are essential to analyse these dynamics.
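Why a 3D, high-resolution model is needed for upwelling can be seen from mass conservation. In hydrostatic ocean models, the vertical velocity $w$ is commonly diagnosed from the incompressible continuity equation, integrated upward from a sea floor at $z = -h(x,y)$ where the flow must follow the bottom slope. This is the standard textbook form, not necessarily SLIM's exact discretisation:

$$
\frac{\partial u}{\partial x} + \frac{\partial v}{\partial y} + \frac{\partial w}{\partial z} = 0
\quad\Longrightarrow\quad
w(z) = -\left( u\,\frac{\partial h}{\partial x} + v\,\frac{\partial h}{\partial y} \right)\Bigg|_{z=-h}
\;-\; \int_{-h}^{z} \left( \frac{\partial u}{\partial x} + \frac{\partial v}{\partial y} \right) dz'
$$

Because $w$ is driven by horizontal divergence and by flow across bottom slopes, a model can only represent upwelling around an individual reef (0.1-1 km) if it resolves horizontal velocity gradients and bathymetry at that scale, which a ~4 km grid cannot.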

Prof. Emmanuel Hanert: “A recent study published in Science used a 4 km resolution model to identify broad upwelling patterns. However, to determine precisely which reefs benefit from this effect, we aim to increase the resolution to 100 metres, 40 times finer, allowing for a much more detailed simulation of vertical water movement at the scale of individual reefs. This level of precision is crucial for targeted conservation strategies, helping to identify and protect naturally resilient reefs in the face of climate change.”
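The "40 times finer" figure is the ratio of the two grid spacings. As a rough illustration only, assuming a hypothetical uniform horizontal grid, the same number of vertical layers, and an explicit time step limited by the CFL condition ($\Delta t \propto \Delta x$), the computational cost grows much faster than the resolution:

$$
\frac{\Delta x_{\text{old}}}{\Delta x_{\text{new}}} = \frac{4000\ \text{m}}{100\ \text{m}} = 40,
\qquad
N_{\text{cells}} \propto \frac{1}{\Delta x^{2}} \;\Rightarrow\; 40^{2} = 1600,
\qquad
\text{cost} \propto \frac{N_{\text{cells}}}{\Delta t} \propto \frac{1}{\Delta x^{3}} \;\Rightarrow\; 40^{3} = 64\,000
$$

A multiscale mesh refines only where needed, so the actual cost increase is expected to be smaller than this uniform-grid estimate; the estimate mainly shows why reef-scale resolution calls for GPU-scale computing.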

Scaling up: From Local Clusters to LUMI

The development of the GPU version of SLIM was spurred by the transition from Zenobe, the CPU-only Walloon Tier-1 cluster, to the new cluster Lucia, which has a large GPU partition. Both clusters are operated by CENAERO.

Prof. Emmanuel Hanert: “Initially, GPU usage was relatively low, allowing us to run simulations efficiently with minimal wait times. However, as demand increased, access to the GPUs became more competitive, resulting in longer queue times and reduced availability.”

The team realised that once the code ran on GPUs, it could also run on other large HPC clusters. They first applied for a preparatory project on LUMI, currently the biggest European supercomputer, which provided a limited number of hours for scaling tests. After confirming that the approach worked, they applied for a regular project. A similar application was made for the MeluXina supercomputer in Luxembourg through a EuroHPC JU call.

From CPU to GPU: A Long-Term Investment

Prof. Emmanuel Hanert: “Applying for a preparatory project on LUMI is a straightforward process, providing an excellent opportunity to gain computing time and conduct scaling analyses. The best approach is to take the initiative—rather than hesitating, just dive in and start experimenting. Challenges and bugs are inevitable but part of the learning process. It is great to see the extensive support available, including consulting services, local assistance, and training sessions across Europe. Researchers, especially those eager to learn, can access the resources needed to develop new skills and successfully port their code from CPU to GPU. While this transition requires time and effort, it is a long-term investment that yields significant performance benefits, making it a worthwhile endeavour.”

Adapting to New Hardware

Scaling up from Lucia to LUMI meant moving from NVIDIA GPUs to AMD GPUs. However, the programming approach was designed to be portable across hardware, so the same code can be compiled and run on NVIDIA and AMD GPUs with minimal modifications. Some libraries are not available on every platform and require minor adjustments, but these issues are typically resolved within a few days.

Miguel De Le Court: “Regarding the two-architecture problem, we wrote our own lightweight abstraction layer (about 300 lines long) that provides a common interface for CUDA, HIP and the CPU. The idea is that GPU kernel functions are written as device lambdas, which are passed either to a CUDA kernel, to a HIP kernel or to a CPU for loop. The rest wraps memory allocations, events and streams, synchronisations, etc. This is somewhat similar to what Kokkos does (although much simpler), allowing the same code to be used regardless of the device. We didn't choose Kokkos mainly because we need shared and constant memory, which is a pain to use in Kokkos.”
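The abstraction layer is described only in outline, so the following C++ sketch illustrates the idea under our own assumptions; it is not SLIM's code, and the names `parallel_for`, `generic_kernel`, `KERNEL_LAMBDA` and `axpy` are hypothetical. A kernel body is written once as a lambda: in a CUDA or HIP build it is launched as a GPU kernel with one thread per index, and in a plain C++ build it runs in an ordinary loop. Memory, stream and event wrappers, as well as shared and constant memory, are deliberately left out.

```cpp
#include <cstddef>

#if defined(__HIPCC__)
#include <hip/hip_runtime.h>
#endif

#if defined(__CUDACC__) || defined(__HIPCC__)
// GPU build: kernel bodies become device lambdas
// (nvcc needs the --extended-lambda flag for this).
#define KERNEL_LAMBDA [=] __device__

// One generic kernel: each thread applies the lambda to one index.
template <typename F>
__global__ void generic_kernel(std::size_t n, F body) {
  const std::size_t i =
      static_cast<std::size_t>(blockIdx.x) * blockDim.x + threadIdx.x;
  if (i < n) body(i);
}

// Launch enough 256-thread blocks to cover n indices (asynchronous).
template <typename F>
void parallel_for(std::size_t n, F body) {
  if (n == 0) return;  // a launch with zero blocks is invalid
  constexpr unsigned threads = 256;
  const unsigned blocks = static_cast<unsigned>((n + threads - 1) / threads);
  generic_kernel<<<blocks, threads>>>(n, body);
}
#else
// CPU build: the same lambda runs inside an ordinary for loop.
#define KERNEL_LAMBDA [=]

template <typename F>
void parallel_for(std::size_t n, F body) {
  for (std::size_t i = 0; i < n; ++i) body(i);
}
#endif

// A kernel written once for every backend: y[i] = a * x[i] + y[i].
// On GPU builds, x and y must point to device memory.
void axpy(std::size_t n, double a, const double* x, double* y) {
  parallel_for(n, KERNEL_LAMBDA(std::size_t i) { y[i] = a * x[i] + y[i]; });
}
```

Only `parallel_for` and the lambda macro change between backends; the numerical kernels themselves stay identical, which is what lets one code base target both NVIDIA and AMD GPUs. Because GPU launches are asynchronous, a complete layer would also wrap synchronisation before results are read back.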

Prof. Hanert's team could also count on the LUMI support team for help. Miguel De Le Court: “In the initial phase of compiling SLIM on LUMI GPUs, the experience with support was consistently positive. I received prompt responses multiple times per day, and ultimately, all issues were resolved.”

LUMI Accelerating Research

Miguel De Le Court: “We are very pleased to be working with the LUMI supercomputer, as it has allowed us to run significantly larger simulations. It enabled us to use up to 1,024 GPUs for benchmarking and around 256 for production simulations, which would not be possible on Lucia, since it has only 256 GPUs in total. On LUMI, accessing these resources remains remarkably straightforward, making high-performance computing much more efficient.”

Prof. Emmanuel Hanert: “We truly appreciate the opportunity to use an infrastructure as advanced as LUMI. Running on one of the world's top six supercomputers is an incredible experience, like driving one of the fastest cars in the world. It’s both exciting and immensely valuable for our research.”

Published on 12/03/2025

- Picture by Toby Hudson

- Ocean Model SLIM: https://www.slim-ocean.be

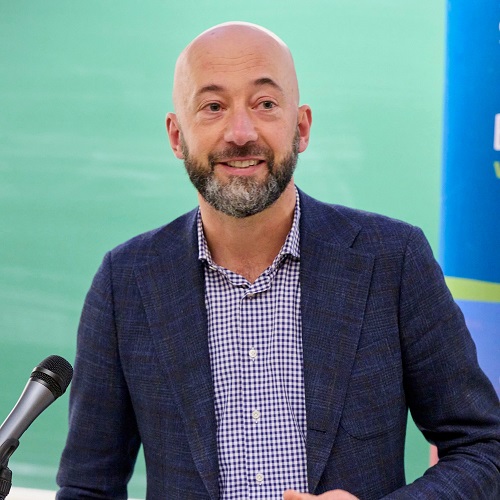

Prof. Emmanuel Hanert (UCLouvain)

Emmanuel Hanert is a full professor of environmental modelling at UCLouvain. He holds a PhD in Physics from UCLouvain (2004) and leads a group of 13 doctoral and post-doctoral researchers working on the development and application of multiscale coastal ocean models to simulate the dynamics of currents and waves, and the transport of oil, sediments and biological material. The group is particularly interested in marine connectivity, environmental impact assessment and coastal vulnerability.

Jonathan Lambrechts

Jonathan Lambrechts is a senior researcher at UCLouvain. He holds a PhD in Applied Sciences from UCLouvain (2011) and supervises all development of the ocean model SLIM.

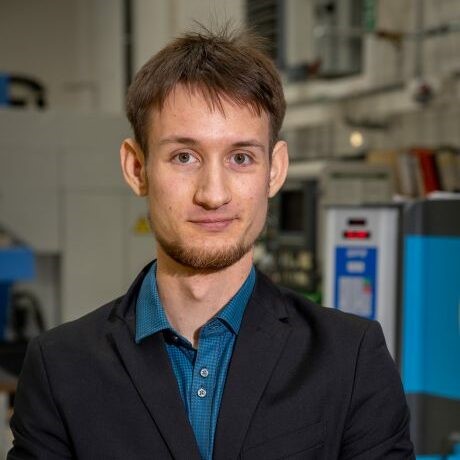

Miguel De Le Court

Miguel De Le Court is a PhD student at UCLouvain. Under the supervision of Jonathan, he developed the new GPU-based architecture of the ocean model SLIM.